Bio

I’m a Master of Science student in Statistical Science at Duke University, with a background in Computer Science and Statistics from Wake Forest University. My research focuses on High-Performance Computing (HPC), efficient and scalable machine learning, numerical methods, and related topics. As a Research Assistant at Duke’s CEI Lab, I work on interdisciplinary projects that combine statistics, computer science, and applied mathematics.

Selected Publications

SADA Project

SADA: Stability-guided Adaptive Diffusion Acceleration

Ting Jiang*, Hancheng Ye*, Yixiao Wang*, Zishan Shao, Jingwei Sun, Jingyang Zhang, Jianyi Zhang, Zekai Chen, Yiran Chen, Hai Li

ICML 2025 (Poster)

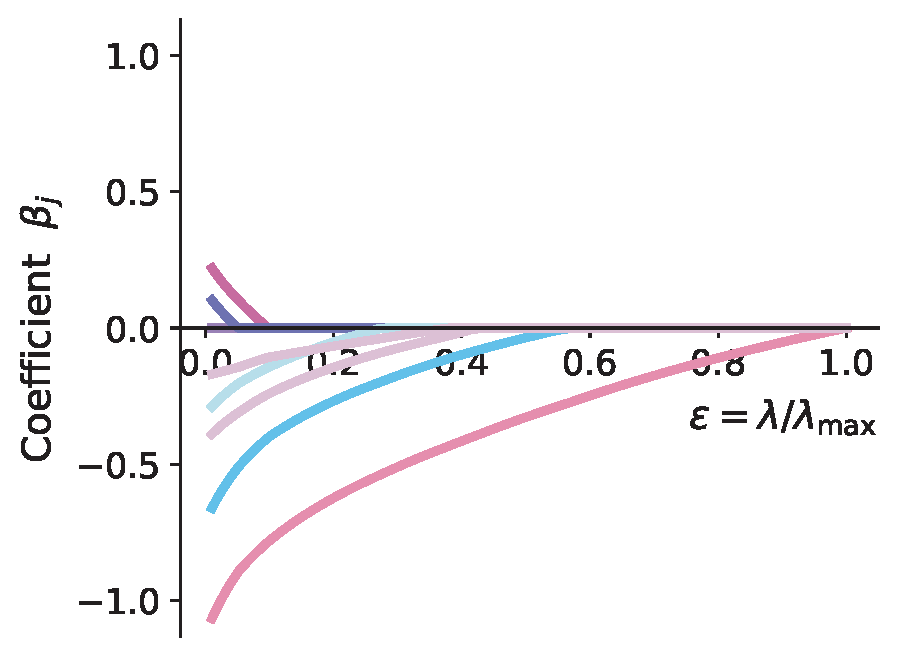

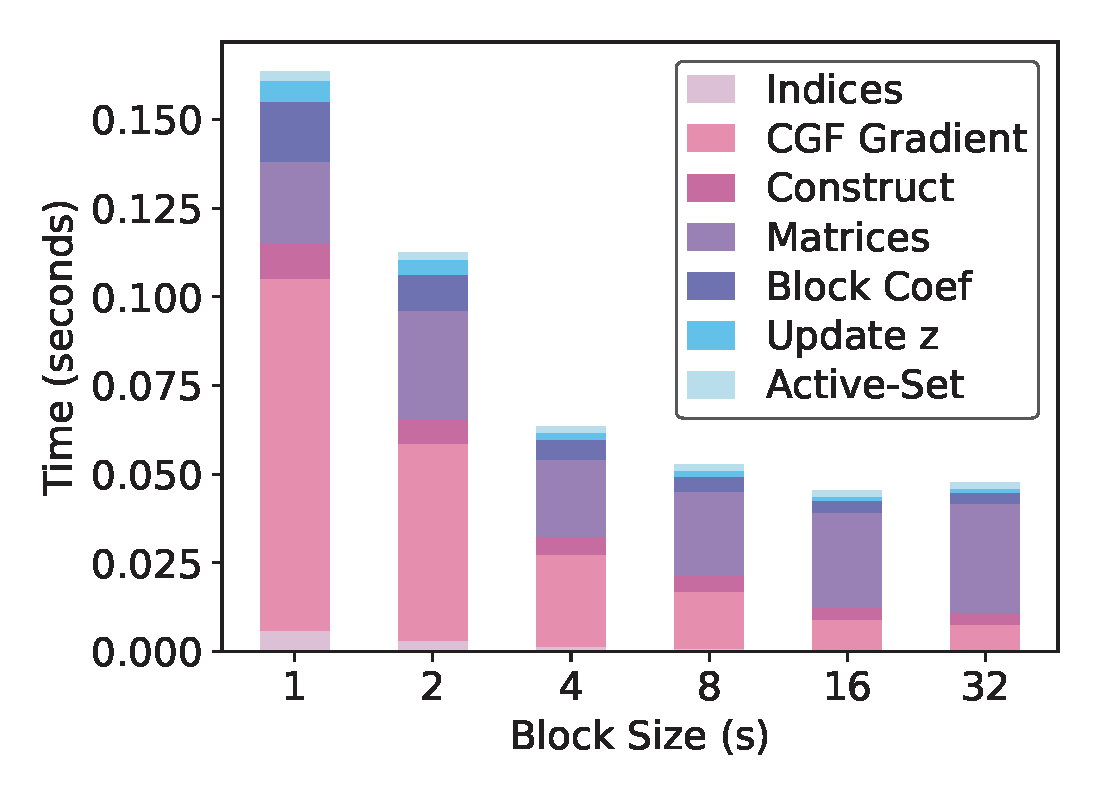

ECCD Project

Enhanced Cyclic Coordinate Descent for Elastic Net Penalized Linear Models

Zishan Shao*, Yixiao Wang*, Ting Jiang, Aditya Devarakonda

NeurIPS 2025 (Poster)

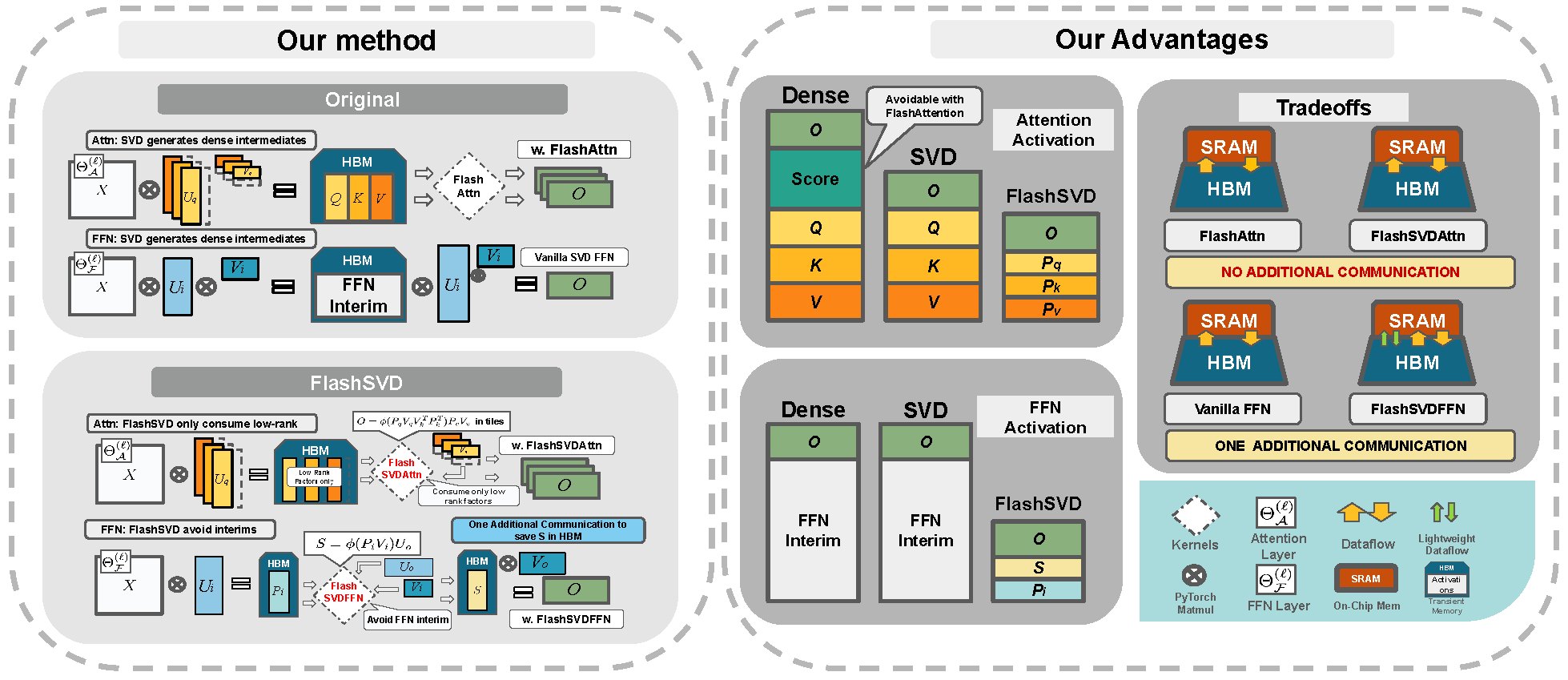

FlashSVD Project

FlashSVD: Memory Efficient Approach for SVD-Based Low Rank Model Inference

Zishan Shao, Yixiao Wang, Qinsi Wang, Ting Jiang, Zhixu Du, Hancheng Ye, Danyang Zhuo, Yiran Chen, Hai Li

AAAI 2026 (Submitted)

Education

Duke University, Durham, NC

- Master of Science in Statistical Science, Expected 2025

- Advisor: Dr. Yiran Chen

- Research Lab: CEI Lab (Computational Evolutionary Intelligence)

- Relevant Coursework: Real Analysis, Advanced Numerical Linear Algebra, Numerical Methods, Bayesian Statistics, Advanced Statistical Inference, Predictive Modeling & Statistical Learning, Electrical Computing in Neural Networks, Advanced Stochastic Processes, Advanced Hierarchical Modeling

Wake Forest University, Winston-Salem, NC

- Bachelor of Science in Computer Science, May 2024

- Second Major (B.S.) in Statistics

- Honors: Summa Cum Laude; Dean’s List (all semesters)

- Dissertation: “Communication Avoiding Coordinate Descent Methods On Kernel And Regularized Linear Models”

- Advisor: Dr. Aditya Devarakonda

- Relevant Coursework: Python, C, R, Java, Statistical & Machine Learning, Statistical Inference, Linear Models, Multivariate Analysis, Probability, Network Theory & Analysis, Advanced Data Structures and Algorithms, Assembly Languages, Computer Systems, Multivariate Calculus, Linear Algebra, Discrete Mathematics, Time Series Forecasting, Special Topics (Computer Vision), High-Performance Computing, Parallel Computing

Shanghai Weiyu High School, Shanghai, China

Academic Profiles

- Google Scholar - View my publications and citations